Analyzing Bitcoin Drip Patterns in BTCUSDT: Insights from 2021 to April 2025

Sukrit Sunama 7 months ago

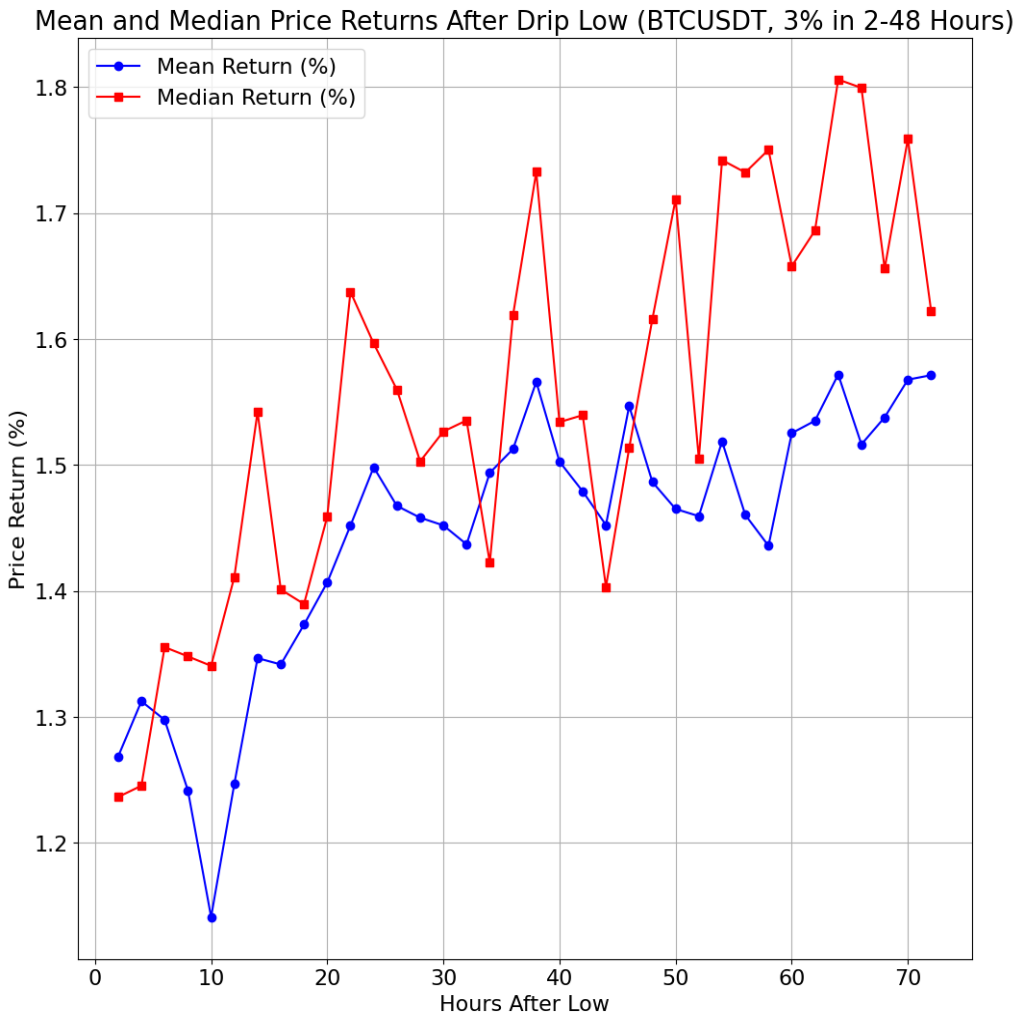

In the volatile world of cryptocurrency trading, understanding price patterns can provide valuable insights for traders and analysts. A "Drip" in BTCUSDT is defined as a rapid price decline of at least 3% within a 6-to-48-hour window, with the added constraint that no bullish (positive) hourly candle in the window has a high-to-low range above 2%. This analysis explores the characteristics, frequency, and behavior of Drips from January 2021 to April 2025, using hourly data to uncover patterns in their occurrence, size, and post-Drip price movements. We also examine technical indicators at the start and bottom of Drips to identify potential signals for traders....
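To make the Drip definition concrete, here is a minimal sketch of how such a pattern could be flagged in hourly candles. It is not the detection code used for the analysis: the `Candle` class, the `find_drip` function, and the choice to measure the decline from the opening price of the first candle to the close of a later one are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Candle:
    """One hourly candle (hypothetical container, not from the article)."""
    open: float
    high: float
    low: float
    close: float


def find_drip(
    candles: List[Candle],
    start: int,
    min_drop: float = 0.03,        # decline of at least 3%
    min_hours: int = 6,            # window of 6 hours...
    max_hours: int = 48,           # ...to 48 hours
    max_bull_range: float = 0.02,  # bullish candles: high-to-low at most 2%
) -> Optional[int]:
    """Return the index of the candle that completes a Drip starting at
    `start`, or None if no Drip forms within the window."""
    start_price = candles[start].open
    last = min(start + max_hours, len(candles))
    for i in range(start, last):
        c = candles[i]
        # A bullish candle with too wide a range breaks the pattern.
        if c.close > c.open and (c.high - c.low) / c.low > max_bull_range:
            return None
        hours = i - start + 1
        drop = (start_price - c.close) / start_price
        if hours >= min_hours and drop >= min_drop:
            return i
    return None
```

Scanning a full history would mean calling `find_drip` for each candidate start index and then skipping past any Drip that is found, so that overlapping windows are not counted twice. That de-duplication rule is also an assumption, since the excerpt does not say how overlaps are handled.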

Moonshots in Bitcoin: Riding the Price Surges to All-Time Highs (2017-2025)

Sukrit Sunama 7 months ago

In the electrifying realm of cryptocurrency, Moonshots represent those heart-pounding moments when Bitcoin (BTCUSDT) surges rapidly, capturing the attention of traders and investors alike. But how significant are these price spikes, and do they propel Bitcoin to its All-Time Highs (ATHs)? In this data-driven exploration, we analyze eight years of hourly BTCUSDT data (mid-2017 to mid-2025) to dissect Moonshots—defined as price increases ≥ 5% within 12 hours, with lows not dropping more than 3%—and their role in Bitcoin’s historic peaks. We’ll also contrast Moonshots with their opposite, Drips, and explore seasonal patterns, connecting these findings to Bitcoin’s storied past to offer insights for enthusiasts. Dive in with us!...
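The Moonshot definition above can be sketched in a similar way. This is only one reading of the rule: the function name `is_moonshot` is hypothetical, and both the gain and the 3% dip limit are measured against the close of the starting candle, which the excerpt does not specify.

```python
from typing import Sequence


def is_moonshot(
    closes: Sequence[float],
    lows: Sequence[float],
    start: int,
    min_gain: float = 0.05,  # price increase of at least 5%
    max_hours: int = 12,     # reached within 12 hourly candles
    max_dip: float = 0.03,   # lows may not fall more than 3% below the base
) -> bool:
    """Return True if a Moonshot begins at index `start`."""
    base = closes[start]
    end = min(start + max_hours + 1, len(closes))
    for i in range(start + 1, end):
        # The move is disqualified once a low dips too far below the base.
        if (base - lows[i]) / base > max_dip:
            return False
        if (closes[i] - base) / base >= min_gain:
            return True
    return False
```

Checking the dip before the gain on each candle is a deliberately conservative choice: with hourly data there is no way to know whether the low or the close came first inside the same candle.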

Understanding Bitcoin Price Drips and How to Handle Them

Sukrit Sunama 7 months ago

The first night I bought BTC in 2018 to pay for my mining ASIC was the night I discovered just how unpredictable Bitcoin’s price can be—and how it can affect your sleep. I remember waking up at 3 a.m. to use the bathroom, only to find myself glued to the screen, watching the crypto market. That night, BTC’s price had dropped 6% since I went to bed. I’m pretty sure most of my readers have experienced something similar at least once!

That was the moment I started thinking deeply about price drops—what caused them, what might happen next, and when those big moves might occur. Let’s call this phenomenon a “Price Drip.”...

Understanding AI Trading Agents: How Reinforcement Learning Powers Automated Trading

Sukrit Sunama 9 months ago


When people hear "AI," they often think of ChatGPT or other generative AI models categorized as LLMs (Large Language Models). However, a new AI paradigm is gaining attention—Agent AI, which I’ll explore in this article.

The Four Categories of AI

Before diving into Agent AI, let's first classify AI based on how it is trained and its purpose:

- Supervised AI – This type of AI learns from labeled data, meaning humans (or other intelligent entities) provide direct input-output mappings. Common examples include email spam classification and image recognition. Interestingly, the first version of ChatGPT was a supervised AI model, requiring human-written questions and answers for training.

- Unsupervised AI – Unlike supervised AI, this category doesn't require manually labeled data. It learns from patterns in raw data. Examples include image recognition for identifying individuals and the latest versions of ChatGPT, which were train...

Building Statera: My 3-Year Journey to Creating a Profitable Crypto Trading Agent

Sukrit Sunama 9 months ago

This is the story of how I built Statera, my first AI-powered trading agent. But before diving into the journey, let’s first understand what a Statera agent is.

What is Statera?

Statera is an AI trading agent designed for spot trading BTCUSDT. Its primary goal is to generate profits exceeding the simple buy-and-hold strategy while managing risks effectively. Sounds simple, right? But in reality, it took me three years to develop a version that consistently generates profits while minimizing losses.

The Beginning: A Flawed Approach (2022)

In 2022, I had the idea of using AI to trade crypto instead of relying on manual strategies, which felt no different from gambling. I began studying algorithmic trading and quickly discovered that technical analysis was widely regarded as the key to success.

However, after extensive manual and algorithmic ...

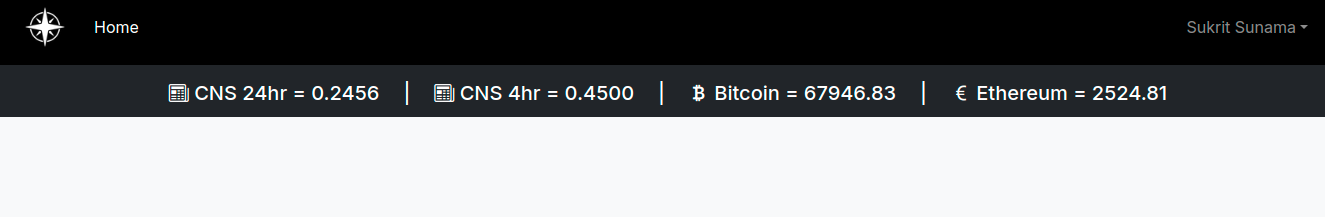

What is CNS at the top of this website?

Sukrit Sunama 9 months ago

Crypto News Sentiment (CNS) is a crucial indicator for cryptocurrency traders. It offers real-time insights into the overall sentiment surrounding the crypto market, derived from a comprehensive analysis of news articles.

In this post, I'll delve into:

- How CNS is calculated using advanced AI techniques.

- The significance of CNS as an independent variable influencing market prices.

- The practical applications of CNS for informed trading decisions.

By understanding CNS, you can gain a competitive edge and make more strategic trades in the dynamic crypto market.

To calculate Crypto News Sentiment (CNS), a sophisticated AI bot scours numerous crypto-related news sources. This bot analyzes the content of these articles, extracting sentiment values categorized as positive, negative, or neutral. To provide a comprehensive overview, the individual sentiment values are then aggregated into a single score ranging from -1 (strongly negative) to 1 (strongly positive). This aggr...
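As a rough illustration of the aggregation step described above, the sketch below maps each article's label to a number and averages the results. The excerpt does not state the actual formula, so the plain mean, the `LABEL_TO_VALUE` mapping, and the `aggregate_sentiment` function are all assumptions.

```python
from typing import Iterable

# Assumed numeric value for each sentiment label.
LABEL_TO_VALUE = {"positive": 1.0, "neutral": 0.0, "negative": -1.0}


def aggregate_sentiment(labels: Iterable[str]) -> float:
    """Average per-article sentiment labels into one score in [-1, 1]."""
    values = [LABEL_TO_VALUE[label] for label in labels]
    if not values:
        return 0.0  # with no articles, treat sentiment as neutral
    return sum(values) / len(values)


# Two positive, one negative, and one neutral article.
score = aggregate_sentiment(["positive", "positive", "negative", "neutral"])
print(score)  # 0.25
```

A plain mean keeps the score within the stated range of -1 to 1 by construction. A production indicator might weight articles by source or recency, but nothing in the excerpt confirms that.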